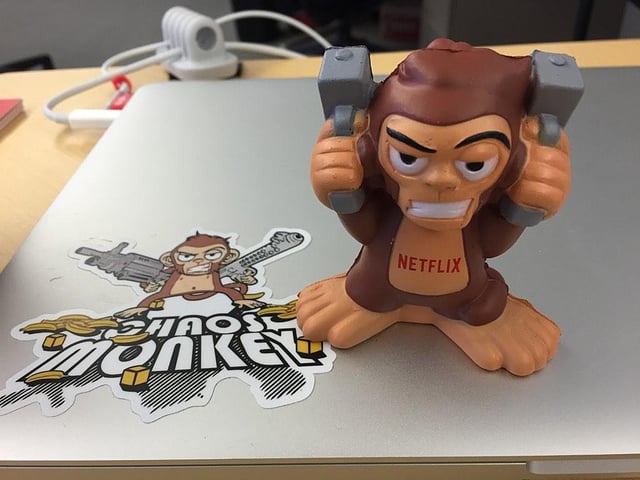

Imagine a bunch of monkeys running around your data center, pulling cables, trashing routers, and wreaking havoc on your applications and infrastructure. In these days of heated competition between online gaming operators, player experience is more crucial than ever. Continuity of operations comes above all else, and avoiding churn caused by service disruption is an organizational mantra.

That image is exactly what motivated the Netflix engineering team when they thought through the resiliency of their IT infrastructure, both hardware and software. Chaos Monkey is a tool invented by Netflix in 2011 to test the resilience of its IT infrastructure. It works by intentionally disabling computers and services in the production network to test how the remaining systems respond to the outage. Chaos Monkey is now part of a larger suite of tools called the Simian Army, designed to simulate and test responses to various system failures and edge cases. It’s also part of an engineering discipline dubbed Site Reliability Engineering (SRE).
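The idea of disabling a machine on purpose and then checking whether the service survives can be sketched in a few lines. The following is a toy simulation only, not Netflix's Chaos Monkey: the fleet, the instance names, and both helper functions are hypothetical and exist purely for illustration.

```python
import random


def terminate_random_instance(instances, rng):
    """Mark one running instance as down and return its name (None if none are running)."""
    running = [name for name, is_up in instances.items() if is_up]
    if not running:
        return None
    victim = rng.choice(running)
    instances[victim] = False
    return victim


def service_is_available(instances, min_healthy=1):
    """The service counts as available while at least min_healthy instances are up."""
    return sum(instances.values()) >= min_healthy


# Hypothetical fleet of three game servers, all healthy at the start.
fleet = {"game-server-1": True, "game-server-2": True, "game-server-3": True}
rng = random.Random(42)  # seeded so the experiment is repeatable

victim = terminate_random_instance(fleet, rng)
available = service_is_available(fleet, min_healthy=2)
print(f"terminated {victim}; service still available: {available}")
```

In a real environment the "terminate" step would act on live infrastructure and the availability check would come from monitoring, so an experiment like this is normally run with a clear rollback plan.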

A Site Reliability Engineer (SRE) will spend up to 50 percent of her time on "ops" work such as incident resolution, on-call duty, and manual intervention. Since the software system that an SRE oversees is expected to be highly automated and self-healing, the SRE should spend the other 50 percent of her time on development tasks such as adding new features, scaling, and automation, making sure the “chaos monkey” stays under control.

Why is it fundamental to the player experience?

Continuity of service in the era of the cloud is mandatory and difficult to achieve. There is a nearly endless number of things that can go wrong. And wrong they will go. Multiple vendors service any online operator’s gaming platform, from CRM to mobile games and payment servers, to name just a few. Any disruption of service affects thousands of players who might churn, which means the operator is practically “leaving money on the table”.

SRE and incident management are all the rage today. Amazon CTO Werner Vogels clearly describes a typical major event that causes an outage: "You see the symptoms, but you do not necessarily see the root cause of it ... you immediately fire off a team whose task is to actually communicate with the customers ... making sure that everyone is aware of exactly what is happening."

Meanwhile, he continues, "internal teams of course immediately start going off and trying to find what's the root cause of this is, and whether we can repair or restore it, or what other kinds of actions we can start taking." Orchestrating the response to such an event is at the heart of what incident managers and SREs do when disruption occurs.

The future of incident orchestration

Managing a major incident has transformed from an obscure art into a measurable science. Managing an incident is also about keeping customers, such as affiliates or partners, informed throughout the event. In the era of immediate satisfaction, customers are not looking for you to tell them "wait, hold on". They demand to be in the loop and in the know. Meanwhile, incident managers are struggling to resolve the issues while making sure that transparency governs their actions and every stakeholder is informed. Sometimes that means hundreds of people per incident.

Vogels states it very clearly: "I think we can blame ourselves, in terms of not having turned this into sort of a procedure or something that was automated, where we could've had total good control over what the number could be."

This is a key point for Vogels: as you grow and develop, introducing too many points that require human intervention results in possible points of failure. Where possible, automate.

Automated escalation procedures that kick in as incidents occur help you master the event and lower time to resolution. Various vendors are adding those capabilities to their monitoring infrastructure. Through integrations with third-party software, legacy operational workflow tools like ServiceNow and infrastructure monitoring companies such as PagerDuty are adding layers of incident management to their service suites. This still leaves the SRE scrambling to deal with multiple ticketing and messaging platforms throughout the incident.
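To make the idea of an automated escalation procedure concrete, here is a minimal sketch of a time-based escalation policy. It is an illustration under stated assumptions, not the API of ServiceNow, PagerDuty, or any other product: the `EscalationStep` type, the `POLICY` thresholds, the role names, and `contacts_to_notify` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EscalationStep:
    after_minutes: int  # minutes the incident has gone unacknowledged
    notify: str         # role to notify once that threshold is reached


# Hypothetical policy: thresholds and roles are illustrative only.
POLICY = [
    EscalationStep(after_minutes=0, notify="on-call SRE"),
    EscalationStep(after_minutes=15, notify="SRE team lead"),
    EscalationStep(after_minutes=30, notify="incident manager"),
]


def contacts_to_notify(policy, minutes_unacknowledged):
    """Return every role whose threshold has been reached, in policy order."""
    return [
        step.notify
        for step in policy
        if minutes_unacknowledged >= step.after_minutes
    ]


print(contacts_to_notify(POLICY, 20))
```

Encoding the policy as data rather than as tribal knowledge is what removes the human decision point: the same rules fire every time, regardless of who happens to be on shift.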

Leading upstarts like Exigence are writing the book anew and redesigning incident automation around a single pane of glass. The future of incident management lies in a single focal point through which SRE teams can concentrate on incident resolution and post-mortem reporting, rather than spending endless effort on in-house development and integrations.

The future of managing endless software and hardware failure points is based on proactive site reliability integration between the NOC, engineering, and developers. It also requires a high level of event automation and preparedness in the face of a rising number of incidents.